LILA BC (Labeled Information Library of Alexandria: Biology and Conservation)

LILA BC is a repository for data sets related to biology and conservation, intended as a resource for both machine learning (ML) researchers and those that want to harness ML for biology and conservation. LILA BC is intended to host data from a variety of modalities, but emphasis is placed on labeled images; we currently host over ten million labeled images.LILA BC

The Labeled Information Library of Alexandria: Biology and Conservation

Overview

LILA BC is a repository for data sets related to biology and conservation, intended as a resource for both machine learning researchers and those that want to harness ML for biology and conservation. All datasets are available within the following S3 folder:

s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife

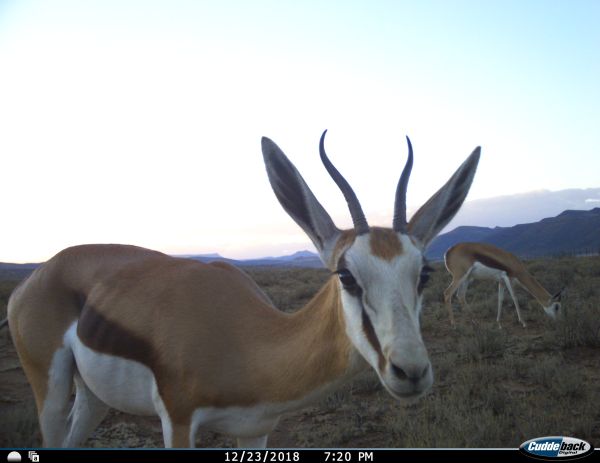

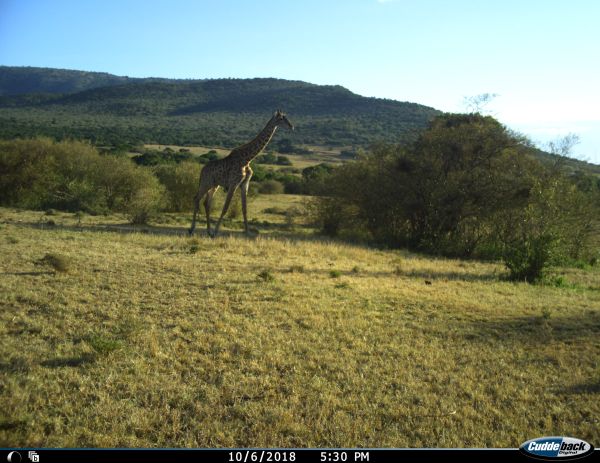

About half of the datasets on LILA contain images from motion-triggered wildlife cameras (aka “camera traps”); those datasets are interoperable, use a consistent metadata format, and have been mapped to a common taxonomy, such that although they are presented as separate datasets, all the camera trap datasets can be treated as a single dataset for training and evaluating ML models. Consequently, this collection is divided into two sections: camera trap datasets and non-camera-trap datasets. More information about the harmonization of camera trap datasets on LILA is available here.

We ask that if you use a data set hosted on LILA BC, you give credit to the data set owner in the manner listed in the data set’s documentation.

For more information, or to inquire about adding a data set, email info@lila.science.

We also maintain a list of other labeled data sets related to conservation.

LILA BC is maintained by a working group that includes representatives from Ecologize, Zooniverse, the Evolving AI Lab, and Snapshot Safari.

Data is available on AWS, GCP, and Azure. Hosting on AWS is provided by Source Cooperative. Hosting on Google Cloud is provided by the Google Cloud Public Datasets program. Hosting on Microsoft Azure is provided by the Microsoft AI for Good Lab.

Table of contents

- North American Camera Trap Images

- Caltech Camera Traps

- Wellington Camera Traps

- Missouri Camera Traps

- WCS Camera Traps

- Snapshot Serengeti

- ENA24-detection

- Snapshot Kruger

- Snapshot Mountain Zebra

- Snapshot Camdeboo

- Snapshot Enonkishu

- Snapshot Kgalagadi

- Snapshot Karoo

- Island Conservation Camera Traps

- Channel Islands Camera Traps

- Idaho Camera Traps

- SWG Camera Traps 2018-2020

- Orinoquía Camera Traps

- Lindenthal Camera Traps

- New Zealand Wildlife Thermal Imaging

- Trail Camera Images of New Zealand Animals

- Desert Lion Conservation Camera Traps

- Ohio Small Animals

- Snapshot Safari 2024 Expansion

- Seattle(ish) Camera Traps

- UNSW Predators

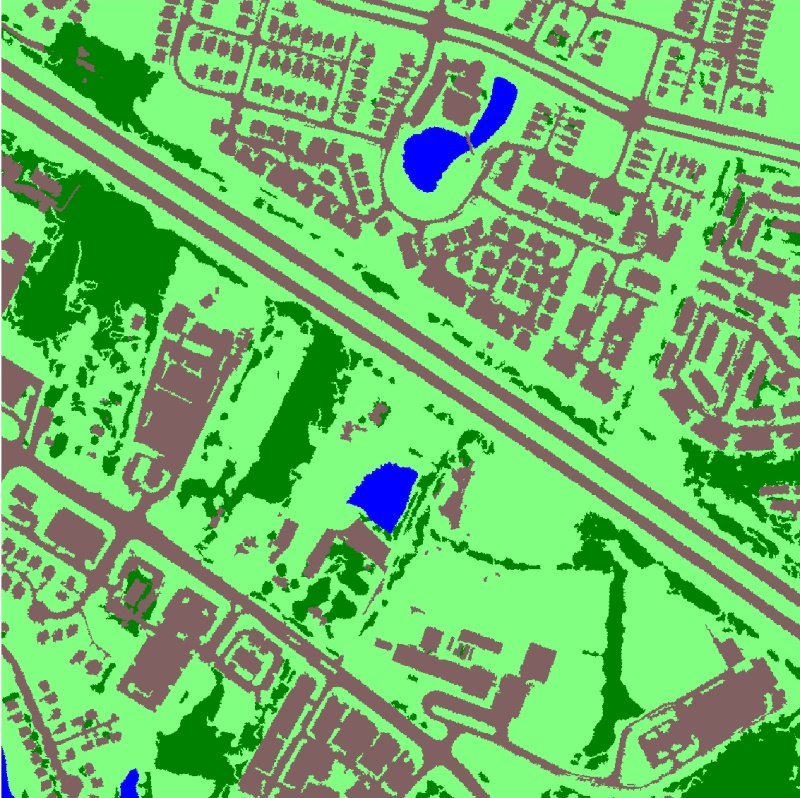

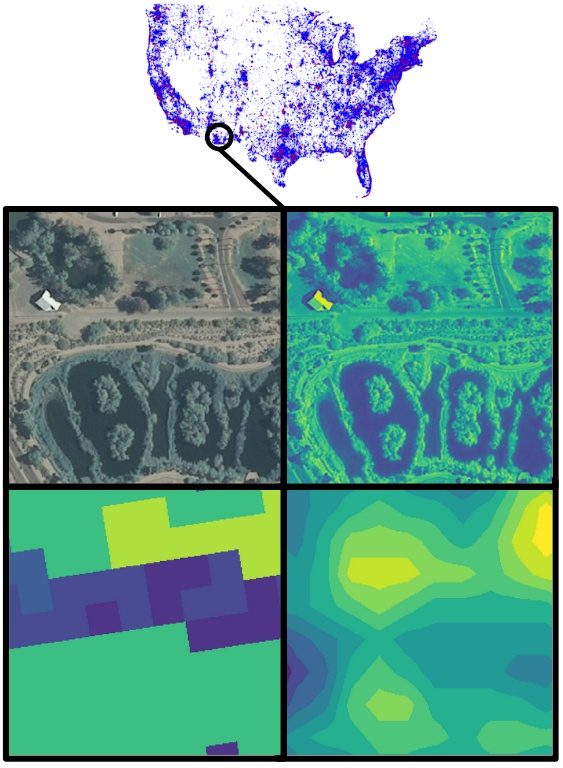

- Chesapeake Land Cover

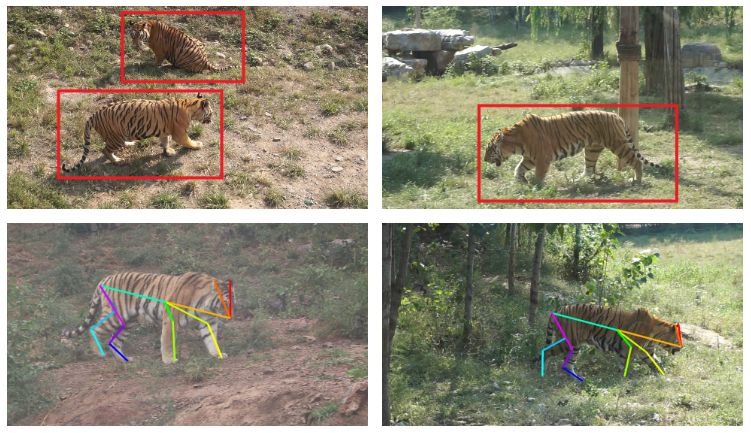

- Amur Tiger Re-identification

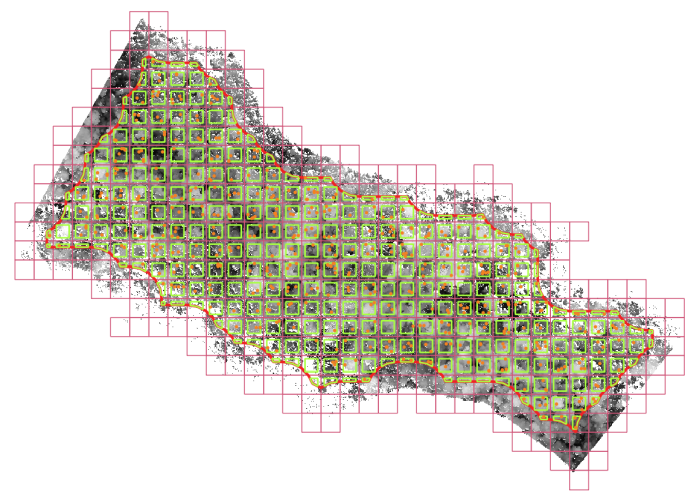

- Conservation Drones

- Forest Canopy Height in Mexican Ecosystems

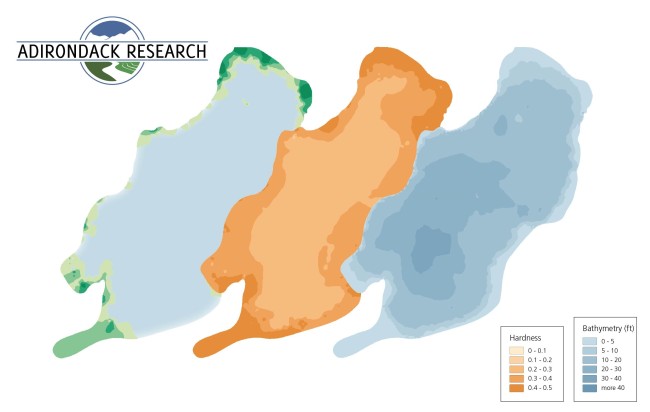

- Adirondack Research Invasive Species Mapping

- Whale Shark ID

- Great Zebra and Giraffe Count and ID

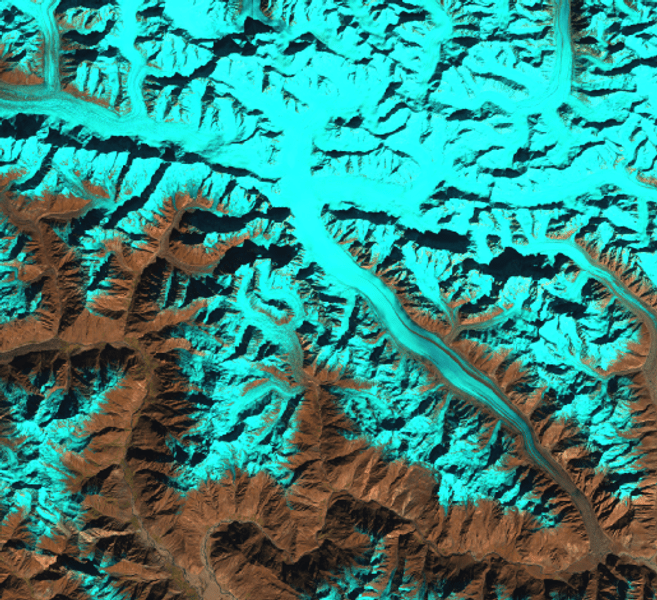

- HKH Glacier Mapping

- Aerial Seabirds West Africa

- GeoLifeCLEF 2020

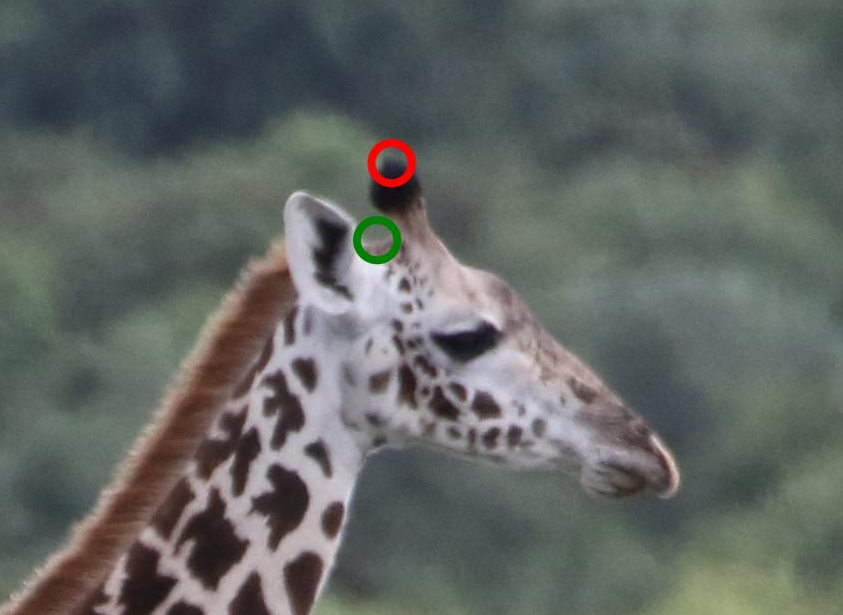

- WNI Giraffes

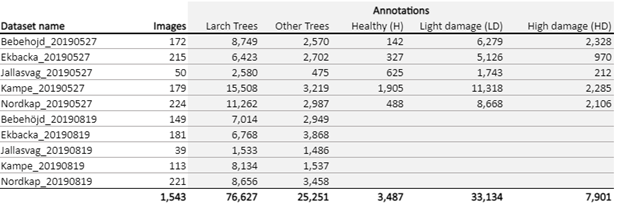

- Forest Damages - Larch Casebearer

- Boxes on Bees and Pollen

- NOAA Arctic Seals 2019

- Leopard ID 2022

- Hyena ID 2022

- Beluga ID 2022

- NOAA Puget Sound Nearshore Fish 2017-2018

- Izembek Lagoon Waterfowl

- Sea Star Re-ID 2023

- UAS Imagery of Migratory Waterfowl at New Mexico Wildlife Refuges

Camera trap datasets

North American Camera Trap Images

S3 base

s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/nacti-unzipped

Overview

This data set contains 3.7M camera trap images from five locations across the United States, with labels for 28 animal categories, primarily at the species level (for example, the most common labels are cattle, boar, and red deer). Approximately 12% of images are labeled as empty. We have also added bounding box annotations to 8892 images (mostly vehicles and birds).

Citation, license, and contact information

More information about this data set is available in the associated manuscript:

Tabak MA, Norouzzadeh MS, Wolfson DW, Sweeney SJ, VerCauteren KC, Snow NP, Halseth JM, Di Salvo PA, Lewis JS, White MD, Teton B. Machine learning to classify animal species in camera trap images: Applications in ecology. Methods in Ecology and Evolution. 2019 Apr;10(4):585-90.

Please cite this manuscript if you use this data set.

This data set is released under the Community Data License Agreement (permissive variant).

For questions about this data set, contact northamericancameratrapimages@gmail.com.

Data format

Annotations are provided in COCO Camera Traps .json format.

For information about mapping this dataset's categories to a common taxonomy, see this page.

Downloading the data

Images are available in the following cloud storage folders:

- gs://public-datasets-lila/nacti-unzipped (GCP)

- s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/nacti-unzipped (AWS)

- https://lilawildlife.blob.core.windows.net/lila-wildlife/nacti-unzipped (Azure)

Links to a series of zipfiles are also provided below, but - whether you want the whole data set, a specific folder, or a subset of the data (e.g. images for one species) - we recommend checking out our guidelines for accessing images without using giant zipfiles.

GCP links

Images (1/4) (488GB)

Images (2/4) (343GB)

Images (3/4) (347GB)

Images (4/4) (191GB)

Metadata (.json) (44MB)

Metadata (.csv) (31MB)

Bounding boxes (363KB)

Azure links

Images (1/4) (488GB)

Images (2/4) (343GB)

Images (3/4) (347GB)

Images (4/4) (191GB)

Metadata (.json) (44MB)

Metadata (.csv) (31MB)

Bounding boxes (363KB)

AWS links

Images (1/4) (488GB)

Images (2/4) (343GB)

Images (3/4) (347GB)

Images (4/4) (191GB)

Metadata (.json) (44MB)

Metadata (.csv) (31MB)

Bounding boxes (363KB)

Having trouble downloading? Check out our FAQ.

Other useful links

MegaDetector results for all camera trap datasets on LILA are available here.

Information about mapping camera trap datasets to a common taxonomy is available here.

Caltech Camera Traps

S3 base

s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/caltech-unzipped

Overview

This data set contains 243,100 images from 140 camera locations in the Southwestern United States, with labels for 21 animal categories (plus empty), primarily at the species level (for example, the most common labels are opossum, raccoon, and coyote), and approximately 66,000 bounding box annotations. Approximately 70% of images are labeled as empty.

More information about this data set is available here.

Citation, license, and contact information

If you use this data set, please cite the associated manuscript:

Sara Beery, Grant Van Horn, Pietro Perona. Recognition in Terra Incognita. Proceedings of the 15th European Conference on Computer Vision (ECCV 2018). (bibtex)

This data set is released under the Community Data License Agreement (permissive variant).

For questions about this data set, contact caltechcameratraps@gmail.com.

Data format

Annotations are provided in COCO Camera Traps .json format.

We have also divided locations (i.e., cameras) into training and validation splits to allow for consistent benchmarking on this data set. The file describing this split specifies a train/val split for all locations in the data set, and also provides the train/val split used in the ECCV paper listed above. The "eccv_train" split here corresponds to the "train" locations and all "cis" locations in the ECCV paper; the "eccv_val" split here corresponds to all "trans" locations in the ECCV paper.

For information about mapping this dataset's categories to a common taxonomy, see this page.

Downloading the data

Images are available in the following cloud storage folders:

- gs://public-datasets-lila/caltech-unzipped/cct_images (GCP)

- s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/caltech-unzipped/cct_images (AWS)

- https://lilawildlife.blob.core.windows.net/lila-wildlife/caltech-unzipped/cct_images (Azure)

A link to a zipfile is also provided below, but - whether you want the whole data set, a specific folder, or a subset of the data (e.g. images for one species) - we recommend checking out our guidelines for accessing images without using giant zipfiles.

- Images (105GB) (GCP link) (Azure link) (AWS link)

Metadata download links:

- Image-level annotations (9MB)

- Bounding box annotations (35MB)

- Recommended train/val splits (4KB)

Having trouble downloading? Check out our FAQ.

CCT20 Benchmark subset

The initial publication of this data set in Beery et al. 2018 proposed a specific subset of Caltech Camera Traps data for benchmarking. Images in this benchmark dataset (CCT20) have been downsized to a maximum of 1024 pixels on a side.

The CCT20 benchmark set is available here:

Benchmark images (6GB)

Metadata files for train/val/cis/trans splits (3MB)

Other useful links

MegaDetector results for all camera trap datasets on LILA are available here.

Information about mapping camera trap datasets to a common taxonomy is available here.

Wellington Camera Traps

S3 base

s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/wellington-unzipped

Overview

This data set contains 270,450 images from 187 camera locations in Wellington, New Zealand. The cameras (Bushnell 119537, 119476, and 119436) recorded sequences of three images when triggered. Each sequence was labelled by citizen scientists and/or professional ecologists from Victoria University of Wellington into 17 classes: 15 animal categories (for example, the most common labels are bird, cat, and hedgehog), empty, and unclassifiable. Approximately 17% of images are labeled as empty. Images within each sequence share the same species label (even though the animal may not have been recorded in all three images).

Citation, license, and contact information

If you use this data set, please cite the associated manuscript:

Victor Anton, Stephen Hartley, Andre Geldenhuis, Heiko U Wittmer 2018. Monitoring the mammalian fauna of urban areas using remote cameras and citizen science. Journal of Urban Ecology; Volume 4, Issue 1.

This data set is released under the Community Data License Agreement (permissive variant).

For questions about this data set, contact Victor Anton.

Data format

Annotations are provided in .csv format as well as in COCO Camera Traps .json format. In the .csv format, empty images are referred to as “nothinghere”; in the .json format, empty images are referred to as “empty” for consistency with other data sets on this site.

For information about mapping this dataset's categories to a common taxonomy, see this page.

Downloading the data

Images are available in the following cloud storage folders:

- gs://public-datasets-lila/wellington-unzipped/images (GCP)

- s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/wellington-unzipped/images (AWS)

- https://lilawildlife.blob.core.windows.net/lila-wildlife/wellington-unzipped/images (Azure)

A link to a zipfile is also provided below, but - whether you want the whole data set, a specific folder, or a subset of the data (e.g. images for one species) - we recommend checking out our guidelines for accessing images without using giant zipfiles.

Download links:

GCP links: images (176GB), .json metadata (8MB), .csv metadata (4MB)

Azure links: images (176GB), .json metadata (8MB), .csv metadata (4MB)

AWS links: images (176GB), .json metadata (8MB), .csv metadata (4MB)

Having trouble downloading? Check out our FAQ.

Other useful links

MegaDetector results for all camera trap datasets on LILA are available here.

Information about mapping camera trap datasets to a common taxonomy is available here.

Missouri Camera Traps

S3 base

s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/missouricameratraps

Overview

This data set contains approximately 25,000 camera trap images representing 20 species (for example, the most common labels are red deer, mouflon, and white-tailed deer). Images within each sequence share the same species label (even though the animal may not have been recorded in all the images in the sequence). Around 900 bounding boxes are included. These are very challenging sequences with highly cluttered and dynamic scenes. Spatial resolutions of the images vary from 1920 × 1080 to 2048 × 1536. Sequence lengths vary from 3 to more than 300 frames.

Citation, license, and contact information

If you use this data set, please cite the associated manuscript:

Zhang, Z., He, Z., Cao, G., & Cao, W. (2016). Animal detection from highly cluttered natural scenes using spatiotemporal object region proposals and patch verification. IEEE Transactions on Multimedia, 18(10), 2079-2092. (bibtex)

This data set is released under the Community Data License Agreement (permissive variant).

For questions about this data set, contact Hayder Yousif and Zhi Zhang.

Update: it appears that those email addresses no longer work, but I don't feel quite right removing them from the page, so I'm leaving them crossed out for posterity. For most questions about this dataset, info@lila.science can help.

Data format

Annotations are provided in the COCO Camera Traps .json format used for most data sets on lila.science. Note that due to some issues in the source data (see below), bounding boxes are accurate, but for images that have multiple individuals in them, a bounding box is present for only one. For these images, we have added a non-standard field to the .json file ("n_boxes") which indicates the number of animals that actually exist in the image, even though a bounding box is present for only one. We hope to fix this at some point, and it's only 79 images, and we know exactly which ones they are (here's a list), so if someone wants to send us updated bounding boxes for those images, we will (a) update the .json file and (b) buy you a fancy coffee.

Annotations are also provided in the whitespace-delimited text format used by the authors (inside the zipfile, along with a README documenting its format), though we recommend using the .json file; the filenames in the text file don't correspond precisely to the image filenames, and (as per above) most images that have multiple animals include redundant bounding boxes, rather than multiple unique bounding boxes.

For information about mapping this dataset's categories to a common taxonomy, see this page.

Downloading the data

Images are available in the following cloud storage folders:

- gs://public-datasets-lila/missouricameratraps/images (GCP)

- s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/missouricameratraps/images (AWS)

- https://lilawildlife.blob.core.windows.net/lila-wildlife/missouricameratraps/images (Azure)

A link to a zipfile is also provided below, but - whether you want the whole data set, a specific folder, or a subset of the data (e.g. images for one species) - we recommend checking out our guidelines for accessing images without using giant zipfiles.

Download links:

Images (10GB) (GCP link) (Azure link) (AWS link)

Metadata (.json, 1MB) (GCP link) (Azure link) (AWS link)

Having trouble downloading? Check out our FAQ.

Other useful links

MegaDetector results for all camera trap datasets on LILA are available here.

Information about mapping camera trap datasets to a common taxonomy is available here.

WCS Camera Traps

S3 base

s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/wcs-unzipped

Overview

This data set contains approximately 1.4M camera trap images representing around 675 species from 12 countries, making it one of the most diverse camera trap data sets available publicly. Data were provided by the Wildlife Conservation Society. The most common classes are tayassu pecari (peccary), meleagris ocellata (ocellated turkey), and bos taurus (cattle). A complete list of classes and associated image counts is available here. Approximately 50% of images are empty. We have also added approximately 375,000 bounding box annotations to approximately 300,000 of those images, which come from sequences covering almost all locations.

Sequences are inferred from timestamps, so may not strictly represent bursts. Images were labeled at a combination of image and sequence level, so – as is the case with most camera trap data sets – empty images may be labeled as non-empty (if an animal was present in one frame of a sequence but not in others). Images containing humans are referred to in metadata, but are not included in the data files.

Contact information

This data set is released under the Community Data License Agreement (permissive variant).

Data format

Annotations are provided in the COCO Camera Traps .json format used for most data sets on lila.science.

For information about mapping this dataset's categories to a common taxonomy, see this page.

Accessing the data

Class-level annotations are available here:

Bounding box annotations are available here:

wcs_20220205_bboxes_with_classes.zip (with the same classes as the class-level labels)

wcs_20220205_bboxes_no_classes.zip (with just animal/person/vehicle labels)

Recommended train/val/test splits are available here:

Images are available in the following cloud storage folders:

- gs://public-datasets-lila/wcs-unzipped (GCP)

- s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/wcs-unzipped (AWS)

- https://lilawildlife.blob.core.windows.net/lila-wildlife/wcs-unzipped (Azure)

We recommend downloading images (the whole folder, or a subset of the folder) using gsutil (for GCP), aws s3 (for AWS), or AzCopy (for Azure). For more information about using gsutil, aws s3, or AzCopy, check out our guidelines for accessing images without using giant zipfiles.

If you prefer to download images via http, you can. For example, one image (with lots of birds) appears in the metadata as:

animals/0011/0009.jpg

This image can be downloaded directly from any of the following URLs (one for each cloud):

https://storage.googleapis.com/public-datasets-lila/wcs-unzipped/animals/0011/0009.jpg

https://lilawildlife.blob.core.windows.net/lila-wildlife/wcs-unzipped/animals/0011/0009.jpg

Having trouble downloading? Check out our FAQ.

Other useful links

MegaDetector results for all camera trap datasets on LILA are available here.

Information about mapping camera trap datasets to a common taxonomy is available here.

Snapshot Serengeti

S3 base

s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/snapshotserengeti-unzipped

Overview

This data set contains approximately 2.65M sequences of camera trap images, totaling 7.1M images, from seasons one through eleven of the Snapshot Serengeti project, the flagship project of the Snapshot Safari network. Using the same camera trapping protocols at every site, Snapshot Safari members are collecting standardized data from many protected areas in Africa, which allows for cross-site comparisons to assess the efficacy of conservation and restoration programs. Serengeti National Park in Tanzania is best known for the massive annual migrations of wildebeest and zebra that drive the cycling of its dynamic ecosystem.

Labels are provided for 61 categories, primarily at the species level (for example, the most common labels are wildebeest, zebra, and Thomson's gazelle). Approximately 76% of images are labeled as empty. A full list of species and associated image counts is available here. We have also added approximately 150,000 bounding box annotations to approximately 78,000 of those images.

Additional data from this project is available as part of the Snapshot Safari 2024 Expansion dataset.

Citation, license, and contact information

The images and species-level labels are described in more detail in the associated manuscript:

Swanson AB, Kosmala M, Lintott CJ, Simpson RJ, Smith A, Packer C (2015) Snapshot Serengeti, high-frequency annotated camera trap images of 40 mammalian species in an African savanna. Scientific Data 2: 150026. (DOI) (bibtex)

Please cite this manuscript if you use this data set.

For questions about this data set, contact Sarah Huebner at the University of Minnesota.

This data set is released under the Community Data License Agreement (permissive variant).

The original Snapshot Serengeti data set included a "human" class label; for privacy reasons, we have removed those images from this version of the data set. Those labels are still present in the metadata. If those images are important to your work, contact us; in some cases it will be possible to release those images under an alternative license.

Data format

Annotations are provided in COCO Camera Traps .json format. .json files are provided for each season, and a single .json file is also provided for all seasons combined. Note that annotations are tied to images, but are only reliable at the sequence level. For example, there are rare sequences in which two of three images contain a lion, but the third is empty (lions, it turns out, walk away sometimes), but all three images would be annotated as "lion".

Annotations are also provided in a (non-standard) .csv format. These are intended to allow replication of the original dataset paper, but they have not been maintained as diligently as the .json files and their format has not been documented, so unless you have a strong reason to use the .csv files, we recommend using the .json files.

Additional metadata related to the aggregation of human labels into consensus labels is available in an addendum.

We have also divided locations (i.e., cameras) into training and validation splits to allow for consistent benchmarking on this data set.

For information about mapping this dataset's categories to a common taxonomy, see this page.

Downloading the data

Images are available in the following cloud storage folders:

- gs://public-datasets-lila/snapshotserengeti-unzipped (GCP)

- s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/snapshotserengeti-unzipped (AWS)

- https://lilawildlife.blob.core.windows.net/lila-wildlife/snapshotserengeti-unzipped (Azure)

A link to a zipfile per season is also provided below, but - whether you want the whole data set, a specific folder, or a subset of the data (e.g. images for one species) - we recommend checking out our guidelines for accessing images without using giant zipfiles.

Data download links:

Season 1 (242GB) (images, GCP) (images, Azure) (images, AWS) (metadata)

Season 2 (382GB) (images, GCP) (images, Azure) (images, AWS) (metadata)

Season 3 (25`GB) (images, GCP) (images, Azure) (images, AWS) (metadata)

Season 4 (368GB) (images, GCP) (images, Azure) (images, AWS) (metadata)

Season 5 (596GB) (images, GCP) (images, Azure) (images, AWS) (metadata)

Season 6 (361GB) (images, GCP) (images, Azure) (images, AWS) (metadata)

Season 7 (636GB) (images, GCP) (images, Azure) (images, AWS) (metadata)

Season 8 (part 1) (450GB) (images, GCP) (images, Azure) (images, AWS) (metadata)

Season 8 (part 2) (414GB) (images, GCP) (images, Azure) (images, AWS)

Season 9 (part 1) (432GB) (images, GCP) (images, Azure) (images, AWS) (metadata)

Season 9 (part 2) (432GB) (images, GCP) (images, Azure) (images, AWS)

Season 10 (part 1) (500GB) (images, GCP) (images, Azure) (images, AWS) (metadata)

Season 10 (part 2) (166GB) (images, GCP) (images, Azure) (images, AWS)

Season 11 (479GB) (images, GCP) (images, Azure) (images, AWS) (metadata)

Having trouble downloading? Check out our FAQ.

Other useful links

MegaDetector results for all camera trap datasets on LILA are available here.

Information about mapping camera trap datasets to a common taxonomy is available here.

ENA24-detection

S3 base

s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/ena24

Overview

This data set contains approximately 10,000 camera trap images representing 23 classes from Eastern North America, with bounding boxes on each image. The most common classes are "American Crow", "American Black Bear", and "Dog".

Citation, license, and contact information

This data set is released under the Community Data License Agreement (permissive variant).

The data set is described in more detail in the associated manuscript:

Yousif H, Kays R, Zhihai H. Dynamic Programming Selection of Object Proposals for Sequence-Level Animal Species Classification in the Wild. IEEE Transactions on Circuits and Systems for Video Technology, 2019. (bibtex)

Please cite this manuscript if you use this data set.

For questions about this data set, contact Hayder Yousif.

Data format

Annotations are provided in the COCO Camera Traps .json format used for most data sets on lila.science. Images containing humans were removed from the data set, but the metadata still contains information about those images.

For information about mapping this dataset's categories to a common taxonomy, see this page.

Downloading the data

Images are available in the following cloud folders:

- gs://public-datasets-lila/ena24/images (GCP)

- s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/ena24/images (AWS)

- https://lilawildlife.blob.core.windows.net/lila-wildlife/ena24/images (Azure)

A link to a zipfile is also provided below, but - whether you want the whole data set, a specific folder, or a subset of the data (e.g. images for one species) - we recommend checking out our guidelines for accessing images without using giant zipfiles.

Download links:

Images (GCP, 3.6GB)

Metadata (GCP, 3.6MB)

Images (Azure, 3.6GB)

Metadata (Azure 3.6MB)

Images (AWS, 3.6GB)

Metadata (AWS, 3.6MB)

An "unofficial" version of the metadata file that only includes annotations for images that are present in the public data set (i.e., from which metadata for images of humans has been removed) is available at:

Metadata (non-human images only) (2.9MB)

It is not guaranteed that this version will be maintained across changes to the underlying data set, but we're like 99.9999999% sure that if you're reading this, it's still accurate.

Having trouble downloading? Check out our FAQ.

Other useful links

MegaDetector results for all camera trap datasets on LILA are available here.

Information about mapping camera trap datasets to a common taxonomy is available here.

Snapshot Kruger

S3 base

s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/snapshot-safari/KRU

Overview

This data set contains 4747 sequences of camera trap images, totaling 10072 images, from the Snapshot Kruger project, part of the Snapshot Safari network. Using the same camera trapping protocols at every site, Snapshot Safari members are collecting standardized data from many protected areas in Africa, which allows for cross-site comparisons to assess the efficacy of conservation and restoration programs. Kruger National Park, South Africa has been a refuge for wildlife since its establishment in 1898, and it houses one of the most diverse wildlife assemblages remaining in Africa. The Snapshot Safari grid was established in 2018 as part of a research project assessing the impacts of large mammals on plant life as boundary fences were removed and wildlife reoccupied areas of previous extirpation.

Labels are provided for 46 categories, primarily at the species level (for example, the most common labels are impala, elephant, and buffalo). Approximately 61.60% of images are labeled as empty. A full list of species and associated image counts is available here.

Additional data from this project is available as part of the Snapshot Safari 2024 Expansion dataset.

Citation, license, and contact information

For questions about this data set, contact Sarah Huebner at the University of Minnesota.

This data set is released under the Community Data License Agreement (permissive variant).

The original data set included a "human" class label; for privacy reasons, we have removed those images from this version of the data set. Those labels are still present in the metadata. If those images are important to your work, contact us; in some cases it will be possible to release those images under an alternative license.

Data format

Annotations are provided in COCO Camera Traps .json format, as well as .csv format. Note that annotations in the .json format are tied to images, but are only reliable at the sequence level. For example, there are rare sequences in which two of three images contain a lion, but the third is empty (lions, it turns out, walk away sometimes), but all three images would be annotated as "lion".

For information about mapping this dataset's categories to a common taxonomy, see this page.

Downloading the data

Images are available in the following cloud folders:

- gs://public-datasets-lila/snapshot-safari/KRU/KRU_public (GCP)

- s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/snapshot-safari/KRU/KRU_public (AWS)

- https://lilawildlife.blob.core.windows.net/lila-wildlife/snapshot-safari/KRU/KRU_public (Azure)

A link to a zipfile is also provided below, but - whether you want the whole data set, a specific folder, or a subset of the data (e.g. images for one species) - we recommend checking out our guidelines for accessing images without using giant zipfiles.

Data download links:

Season 1 images (28GB) (.json metadata) (.csv metadata) (GCP)

Season 1 images (28GB) (.json metadata) (.csv metadata) (Azure)

Season 1 images (28GB) (.json metadata) (.csv metadata) (AWS)

Having trouble downloading? Check out our FAQ.

Other useful links

MegaDetector results for all camera trap datasets on LILA are available here.

Information about mapping camera trap datasets to a common taxonomy is available here.

Snapshot Mountain Zebra

S3 base

s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/snapshot-safari/MTZ

Overview

This data set contains 71688 sequences of camera trap images, totaling 73034 images, from the Snapshot Mountain Zebra project, part of the Snapshot Safari network. Using the same camera trapping protocols at every site, Snapshot Safari members are collecting standardized data from many protected areas in Africa, which allows for cross-site comparisons to assess the efficacy of conservation and restoration programs. Mountain Zebra National Park is located in the Eastern Cape of South Africa in a transitional area between several distinct biomes, which means it is home to many endemic species. As the name suggests, this park contains the largest remnant population of Cape Mountain zebras, ~700 as of 2019 and increasing steadily every year.

Labels are provided for 54 categories, primarily at the species level (for example, the most common labels are zebramountain, kudu, and springbok). Approximately 91.23% of images are labeled as empty. A full list of species and associated image counts is available here.

Additional data from this project is available as part of the Snapshot Safari 2024 Expansion dataset.

Citation, license, and contact information

For questions about this data set, contact Sarah Huebner at the University of Minnesota.

This data set is released under the Community Data License Agreement (permissive variant).

The original data set included a "human" class label; for privacy reasons, we have removed those images from this version of the data set. Those labels are still present in the metadata. If those images are important to your work, contact us; in some cases it will be possible to release those images under an alternative license.

Data format

Annotations are provided in COCO Camera Traps .json format, as well as .csv format. Note that annotations in the .json format are tied to images, but are only reliable at the sequence level. For example, there are rare sequences in which two of three images contain a lion, but the third is empty (lions, it turns out, walk away sometimes), but all three images would be annotated as "lion".

For information about mapping this dataset's categories to a common taxonomy, see this page.

Images are available in the following cloud folders:

- gs://public-datasets-lila/snapshot-safari/MTZ/MTZ_public (GCP)

- s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/snapshot-safari/MTZ/MTZ_public (AWS)

- https://lilawildlife.blob.core.windows.net/lila-wildlife/snapshot-safari/MTZ/MTZ_public (Azure)

A link to a zipfile is also provided below, but - whether you want the whole data set, a specific folder, or a subset of the data (e.g. images for one species) - we recommend checking out our guidelines for accessing images without using giant zipfiles.

Data download links:

Season 1 images (109GB) (.json metadata) (.csv metadata) (GCP)

Season 1 images (109GB) (.json metadata) (.csv metadata) (Azure)

Season 1 images (109GB) (.json metadata) (.csv metadata) (AWS)

Having trouble downloading? Check out our FAQ.

Other useful links

MegaDetector results for all camera trap datasets on LILA are available here.

Information about mapping camera trap datasets to a common taxonomy is available here.

Snapshot Camdeboo

S3 base

s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/snapshot-safari/CDB

Overview

This data set contains 12132 sequences of camera trap images, totaling 30227 images, from the Snapshot Camdeboo project, part of the Snapshot Safari network. Using the same camera trapping protocols at every site, Snapshot Safari members are collecting standardized data from many protected areas in Africa, which allows for cross-site comparisons to assess the efficacy of conservation and restoration programs. Camdeboo National Park, South Africa is crucial habitat for many birds on a global scale, with greater than fifty endemic and near-endemic species and many migratory species.

Labels are provided for 43 categories, primarily at the species level (for example, the most common labels are kudu, springbok, and ostrich). Approximately 43.74% of images are labeled as empty. A full list of species and associated image counts is available here.

Additional data from this project is available as part of the Snapshot Safari 2024 Expansion dataset.

Citation, license, and contact information

For questions about this data set, contact Sarah Huebner at the University of Minnesota.

This data set is released under the Community Data License Agreement (permissive variant).

The original data set included a "human" class label; for privacy reasons, we have removed those images from this version of the data set. Those labels are still present in the metadata. If those images are important to your work, contact us; in some cases it will be possible to release those images under an alternative license.

Data format

Annotations are provided in COCO Camera Traps .json format, as well as .csv format. Note that annotations in the .json format are tied to images, but are only reliable at the sequence level. For example, there are rare sequences in which two of three images contain a lion, but the third is empty (lions, it turns out, walk away sometimes), but all three images would be annotated as "lion".

For information about mapping this dataset's categories to a common taxonomy, see this page.

Downloading the data

Images are available in the following cloud folders:

- gs://public-datasets-lila/snapshot-safari/CDB/CDB_public (GCP)

- s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/snapshot-safari/CDB/CDB_public (AWS)

- https://lilawildlife.blob.core.windows.net/lila-wildlife/snapshot-safari/CDB/CDB_public (Azure)

A link to a zipfile is also provided below, but - whether you want the whole data set, a specific folder, or a subset of the data (e.g. images for one species) - we recommend checking out our guidelines for accessing images without using giant zipfiles.

Data download links:

Season 1 images (33GB) (.json metadata) (.csv metadata) (GCP)

Season 1 images (33GB) (.json metadata) (.csv metadata) (Azure)

Season 1 images (33GB) (.json metadata) (.csv metadata) (AWS)

Having trouble downloading? Check out our FAQ.

Other useful links

MegaDetector results for all camera trap datasets on LILA are available here.

Information about mapping camera trap datasets to a common taxonomy is available here.

Snapshot Enonkishu

S3 base

s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/snapshot-safari/ENO

Overview

This data set contains 13301 sequences of camera trap images, totaling 28544 images, from the Snapshot Enonkishu project, part of the Snapshot Safari network. Using the same camera trapping protocols at every site, Snapshot Safari members are collecting standardized data from many protected areas in Africa, which allows for cross-site comparisons to assess the efficacy of conservation and restoration programs. Enonkishu Conservancy is located on the northern boundary of the Mara-Serengeti ecosystem in Kenya, and is managed by a consortium of stakeholders and land-owning Maasai families. Their aim is to promote coexistence between wildlife and livestock in order to encourage regenerative grazing and build stability in the Mara conservancies.

Labels are provided for 39 categories, primarily at the species level (for example, the most common labels are impala, warthog, and zebra). Approximately 64.76% of images are labeled as empty. A full list of species and associated image counts is available here.

Additional data from this project is available as part of the Snapshot Safari 2024 Expansion dataset.

Citation, license, and contact information

For questions about this data set, contact Sarah Huebner at the University of Minnesota.

This data set is released under the Community Data License Agreement (permissive variant).

The original data set included a "human" class label; for privacy reasons, we have removed those images from this version of the data set. Those labels are still present in the metadata. If those images are important to your work, contact us; in some cases it will be possible to release those images under an alternative license.

Data format

Annotations are provided in COCO Camera Traps .json format, as well as .csv format. Note that annotations in the .json format are tied to images, but are only reliable at the sequence level. For example, there are rare sequences in which two of three images contain a lion, but the third is empty (lions, it turns out, walk away sometimes), but all three images would be annotated as "lion".

For information about mapping this dataset's categories to a common taxonomy, see this page.

Downloading the data

Images are available in the following cloud folders:

- gs://public-datasets-lila/snapshot-safari/ENO/ENO_public (GCP)

- s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/snapshot-safari/ENO/ENO_public (AWS)

- https://lilawildlife.blob.core.windows.net/lila-wildlife/snapshot-safari/ENO/ENO_public (Azure)

A link to a zipfile is also provided below, but - whether you want the whole data set, a specific folder, or a subset of the data (e.g. images for one species) - we recommend checking out our guidelines for accessing images without using giant zipfiles.

Data download links:

Season 1 images (32GB) (.json metadata) (.csv metadata) (GCP)

Season 1 images (32GB) (.json metadata) (.csv metadata) (Azure)

Season 1 images (32GB) (.json metadata) (.csv metadata) (AWS)

Having trouble downloading? Check out our FAQ.

Other useful links

MegaDetector results for all camera trap datasets on LILA are available here.

Information about mapping camera trap datasets to a common taxonomy is available here.

Snapshot Kgalagadi

S3 base

s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/snapshot-safari/KGA

Overview

This data set contains 3611 sequences of camera trap images, totaling 10222 images, from the Snapshot Kgalagadi project, part of the Snapshot Safari network. Using the same camera trapping protocols at every site, Snapshot Safari members are collecting standardized data from many protected areas in Africa, which allows for cross-site comparisons to assess the efficacy of conservation and restoration programs. The Kgalagadi Transfrontier Park stretches from the Namibian border across South Africa and into Botswana, covering a landscape commonly referred to as the Kalahari – an arid savanna. This region is of great interest to help us understand how animals cope with extreme temperatures at both ends of the scale.

Labels are provided for 31 categories, primarily at the species level (for example, the most common labels are gemsbokoryx, birdother, and ostrich). Approximately 76.14% of images are labeled as empty. A full list of species and associated image counts is available here.

Additional data from this project is available as part of the Snapshot Safari 2024 Expansion dataset.

Citation, license, and contact information

For questions about this data set, contact Sarah Huebner at the University of Minnesota.

This data set is released under the Community Data License Agreement (permissive variant).

The original data set included a "human" class label; for privacy reasons, we have removed those images from this version of the data set. Those labels are still present in the metadata. If those images are important to your work, contact us; in some cases it will be possible to release those images under an alternative license.

Data format

Annotations are provided in COCO Camera Traps .json format, as well as .csv format. Note that annotations in the .json format are tied to images, but are only reliable at the sequence level. For example, there are rare sequences in which two of three images contain a lion, but the third is empty (lions, it turns out, walk away sometimes), but all three images would be annotated as "lion".

For information about mapping this dataset's categories to a common taxonomy, see this page.

Downloading the data

Images are available in the following cloud folders:

- gs://public-datasets-lila/snapshot-safari/KGA/KGA_public (GCP)

- s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/snapshot-safari/KGA/KGA_public (AWS)

- https://lilawildlife.blob.core.windows.net/lila-wildlife/snapshot-safari/KGA/KGA_public (Azure)

A link to a zipfile is also provided below, but - whether you want the whole data set, a specific folder, or a subset of the data (e.g. images for one species) - we recommend checking out our guidelines for accessing images without using giant zipfiles.

Data download links:

Season 1 images (10GB) (.json metadata) (.csv metadata) (GCP)

Season 1 images (10GB) (.json metadata) (.csv metadata) (Azure)

Season 1 images (10GB) (.json metadata) (.csv metadata) (AWS)

Having trouble downloading? Check out our FAQ.

Other useful links

MegaDetector results for all camera trap datasets on LILA are available here.

Information about mapping camera trap datasets to a common taxonomy is available here.

Snapshot Karoo

S3 base

s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/snapshot-safari/KAR

Overview

This data set contains 14889 sequences of camera trap images, totaling 38074 images, from the Snapshot Karoo project, part of the Snapshot Safari network. Using the same camera trapping protocols at every site, Snapshot Safari members are collecting standardized data from many protected areas in Africa, which allows for cross-site comparisons to assess the efficacy of conservation and restoration programs. Karoo National Park, located in the arid Nama Karoo biome of South Africa, is defined by its endemic vegetation and mountain landscapes. Its unique topographical gradient has led to a surprising amount of biodiversity, with 58 mammals and more than 200 bird species recorded, as well as a multitude of reptilian species.

Labels are provided for 38 categories, primarily at the species level (for example, the most common labels are gemsbokoryx, hartebeestred, and kudu). Approximately 83.02% of images are labeled as empty. A full list of species and associated image counts is available here.

Additional data from this project is available as part of the Snapshot Safari 2024 Expansion dataset.

Citation, license, and contact information

For questions about this data set, contact Sarah Huebner at the University of Minnesota.

This data set is released under the Community Data License Agreement (permissive variant).

The original data set included a "human" class label; for privacy reasons, we have removed those images from this version of the data set. Those labels are still present in the metadata. If those images are important to your work, contact us; in some cases it will be possible to release those images under an alternative license.

Data format

Annotations are provided in COCO Camera Traps .json format, as well as .csv format. Note that annotations in the .json format are tied to images, but are only reliable at the sequence level. For example, there are rare sequences in which two of three images contain a lion, but the third is empty (lions, it turns out, walk away sometimes), but all three images would be annotated as "lion".

For information about mapping this dataset's categories to a common taxonomy, see this page.

Downloading the data

Images are available in the following cloud folders:

- gs://public-datasets-lila/snapshot-safari/KAR/KAR_public (GCP)

- s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/snapshot-safari/KAR/KAR_public (AWS)

- https://lilawildlife.blob.core.windows.net/lila-wildlife/snapshot-safari/KAR/KAR_public (Azure)

A link to a zipfile is also provided below, but - whether you want the whole data set, a specific folder, or a subset of the data (e.g. images for one species) - we recommend checking out our guidelines for accessing images without using giant zipfiles.

Data download links:

Season 1 images (40GB) (.json metadata) (.csv metadata) (GCP)

Season 1 images (40GB) (.json metadata) (.csv metadata) (Azure)

Season 1 images (40GB) (.json metadata) (.csv metadata) (AWS)

Having trouble downloading? Check out our FAQ.

Other useful links

MegaDetector results for all camera trap datasets on LILA are available here.

Information about mapping camera trap datasets to a common taxonomy is available here.

Island Conservation Camera Traps

S3 base

s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/islandconservationcameratraps

Overview

This data set contains approximately 123,000 camera trap images from 123 camera locations from 7 islands in 6 countries. Data were provided by Island Conservation during projects conducted to prevent the extinction of threatened species on islands.

The most common classes are rabbit, rat, petrel, iguana, cat, goat, and pig, with both rat and cat represented between multiple island sites representing significantly different ecosystems (tropical forest, dry forest, and temperate forests). Additionally, this data set represents data from locations and ecosystems that, to our knowledge, are not well represented in publicly available datasets including >1,000 images each of iguanas, petrels, and shearwaters. A complete list of classes and associated image counts is available here. Approximately 60% of the images are empty. We have also included approximately 65,000 bounding box annotations for about 50,000 images.

In general cameras were dispersed across each project site to detect the presence of invasive vertebrate species that threaten native island species. Cameras were set to capture bursts of photos for each motion detection event (between three and eight photos) with a set delay between events (10 to 30 seconds) to minimize the number of photos. Images containing humans are referred to in metadata, but are not included in the data files.

Citation, license, and contact information

For questions about this data set, contact David Will at Island Conservation.

This data set is released under the Community Data License Agreement (permissive variant).

The original data set included a “human” class label; for privacy reasons, we have removed those images from this version of the data set. Those labels are still present in the metadata. If those images are important to your work, contact us; in some cases it will be possible to release those images under an alternative license.

Data format

Annotations are provided in COCO Camera Traps format. Timestamps were not present in the original data package, and have been inferred from image pixels using an OCR approach. Let us know if you see any incorrect timestamps.

For information about mapping this dataset's categories to a common taxonomy, see this page.

Downloading the data

Metadata is available here (5MB).

Images are available as a single zipfile:

Download from GCP (76GB)

Download from AWS (76GB)

Download from Azure (76GB)

Images are also available (unzipped) in the following cloud storage folders:

- gs://public-datasets-lila/islandconservationcameratraps/public (GCP)

- s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/islandconservationcameratraps/public (AWS)

- https://lilawildlife.blob.core.windows.net/lila-wildlife/islandconservationcameratraps/public (Azure)

We recommend downloading images (the whole folder, or a subset of the folder) using gsutil (for GCP), aws s3 (for AWS), or AzCopy (for Azure). For more information about using gsutil, aws s3, or AzCopy, check out our guidelines for accessing images without using giant zipfiles.

Having trouble downloading? Check out our FAQ.

Other useful links

MegaDetector results for all camera trap datasets on LILA are available here.

Information about mapping camera trap datasets to a common taxonomy is available here.

Channel Islands Camera Traps

S3 base

s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/channel-islands-camera-traps

Overview

This data set contains 246,529 camera trap images from 73 camera locations in the Channel Islands, California. All animals are annotated with bounding boxes. Data were provided by The Nature Conservancy.

Animals are classified as rodent1 (82914), fox (48150), bird (11099), skunk (1071), or other (159). 114,949 images (47%) are empty.

1All images of rats were taken on islands already known to have rat populations.

Citation, license, and contact information

If you use these data in a publication or report, please use the following citation:

The Nature Conservancy (2021): Channel Islands Camera Traps 1.0. The Nature Conservancy. Dataset.

For questions about this data set, contact Nathaniel Rindlaub at The Nature Conservancy.

This data set is released under the Community Data License Agreement (permissive variant).

The original data set included a “human” class label; for privacy reasons, we have removed those images from this version of the data set. Those labels are still present in the metadata.

Data format

Annotations are provided in COCO Camera Traps format.

For information about mapping this dataset's categories to a common taxonomy, see this page.

Downloading the data

Metadata is available here (18MB).

Images are available as a single zipfile:

Download from GCP (86GB)

Download from AWS (86GB)

Download from Azure (86GB)

Images are also available (unzipped) in the following cloud storage folders:

- gs://public-datasets-lila/channel-islands-camera-traps/images (GCP)

- s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/channel-islands-camera-traps/images (AWS)

- https://lilawildlife.blob.core.windows.net/lila-wildlife/channel-islands-camera-traps/images (Azure)

We recommend downloading images (the whole folder, or a subset of the folder) using gsutil (for GCP), aws s3 (for AWS), or AzCopy (for Azure). For more information about using gsutil, aws s3, or AzCopy, check out our guidelines for accessing images without using giant zipfiles.

Having trouble downloading? Check out our FAQ.

Other useful links

MegaDetector results for all camera trap datasets on LILA are available here.

Information about mapping camera trap datasets to a common taxonomy is available here.

Idaho Camera Traps

S3 base

s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/idaho-camera-traps

Overview

This data set contains approximately 1.5 million camera trap images from Idaho. Labels are provided for 62 categories, most of which are animal classes ("deer", "elk", and "cattle" are the most common animal classes), but labels also include some state indicators (e.g. "snow on lens", "foggy lens"). Approximately 70.5% of images are labeled as empty. Annotations were assigned to image sequences, rather than individual images, so annotations are meaningful only at the sequence level.

The metadata contains references to images containing humans, but these have been removed from the dataset (along with images containing vehicles and domestic dogs).

Citation, license, and contact information

Images were provided by the Idaho Department of Fish and Game. No representations or warranties are made regarding the data, including but not limited to warranties of non-infringement or fitness for a particular purpose. Some information shared under this agreement may not have undergone quality assurance procedures and should be considered provisional. Images may not be sold in any format, but may be used for scientific publications. Please acknowledge the Idaho Department of Fish and Game when using images for publication or scientific communication.

Data format

Annotations are provided in COCO Camera Traps format.

For information about mapping this dataset's categories to a common taxonomy, see this page.

Downloading the data

Metadata is available here (25MB).

Images are available in the following cloud storage folders:

- gs://public-datasets-lila/idaho-camera-traps/public (GCP)

- s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/idaho-camera-traps/public (AWS)

- https://lilawildlife.blob.core.windows.net/lila-wildlife/idaho-camera-traps/public (Azure)

We recommend downloading images (the whole folder, or a subset of the folder) using gsutil (for GCP), aws s3 (for AWS), or AzCopy (for Azure). For more information about using gsutil, aws s3, or AzCopy, check out our guidelines for accessing images without using giant zipfiles.

If you prefer to download images via http, you can. For example, the image referred to in the metadata file as:

loc_0000/loc_0000_im_000003.jpg

...can be downloaded directly from any of the following URLs (one for each cloud):

Finally, though we don't recommend downloading this way, images are also available in five giant zipfiles:

Images (part 0) (GCP) (AWS) (Azure) (300GB)

Images (part 1) (GCP) (AWS) (Azure) (300GB)

Images (part 2) (GCP) (AWS) (Azure) (300GB)

Images (part 3) (GCP) (AWS) (Azure) (300GB)

Images (part 4) (GCP) (AWS) (Azure) (250GB)

Having trouble downloading? Check out our FAQ.

Other useful links

MegaDetector results for all camera trap datasets on LILA are available here.

Information about mapping camera trap datasets to a common taxonomy is available here.

SWG Camera Traps 2018-2020

S3 base

s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/swg-camera-traps

Overview

This data set contains 436,617 sequences of camera trap images from 982 locations in Vietnam and Lao, totaling 2,039,657 images. Labels are provided for 120 categories, primarily at the species level (for example, the most common labels are "Eurasian Wild Pig", "Large-antlered Muntjac", and "Unidentified Murid"). Approximately 12.98% of images are labeled as empty. A full list of species and associated image counts is available here. 101,659 bounding boxes are provided on 88,135 images.

This data set is provided by the Saola Working Group; providers include:

- IUCN SSC Asian Wild Cattle Specialist Group’s Saola Working Group (SWG)

- Asian Arks

- Wildlife Conservation Society (Lao)

- WWF Lao

- Integrated Conservation of Biodiversity and Forests project, Lao (ICBF)

- Center for Environment and Rural Development, Vinh University, Vietnam

Citation, license, and contact information

If you use these data in a publication or report, please use the following citation:

SWG (2021): Northern and Central Annamites Camera Traps 2.0. IUCN SSC Asian Wild Cattle Specialist Group’s Saola Working Group. Dataset.

For questions about this data set, contact saolawg@gmail.com.

This data set is released under the Community Data License Agreement (permissive variant).

The original data set included a “human” class label; for privacy reasons, we have removed those images from this version of the data set. Those labels are still present in the metadata.

Data format

Annotations are provided in COCO Camera Traps format.

For information about mapping this dataset's categories to a common taxonomy, see this page.

Accessing the data

Class-level annotations are available here:

Bounding box annotations are available here:

swg_camera_traps.bounding_boxes.with_species.zip (with the same classes as the class-level labels)

swg_camera_traps.bounding_boxes.no_species.zip (with just animal/person/vehicle labels)

Images are available in the following cloud storage folders:

- gs://public-datasets-lila/swg-camera-traps (GCP)

- s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/swg-camera-traps (AWS)

- https://lilawildlife.blob.core.windows.net/lila-wildlife/swg-camera-traps (Azure)

We recommend downloading images (the whole folder, or a subset of the folder) using gsutil (for GCP), aws s3 (for AWS), or AzCopy (for Azure). For more information about using gsutil, aws s3, or AzCopy, check out our guidelines for accessing images without using giant zipfiles.

If you prefer to download images via http, you can. For example, the image referred to in the metadata file as:

public/vietnam/loc_0815/2019/06/image_00059.jpg

...can be downloaded directly from any of the following URLs (one for each cloud):

Having trouble downloading? Check out our FAQ.

Other useful links

MegaDetector results for all camera trap datasets on LILA are available here.

Information about mapping camera trap datasets to a common taxonomy is available here.

Orinoquía Camera Traps

S3 base

s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/orinoquia-camera-traps

Overview

This data set contains 104,782 images collected from a 50-camera-trap array deployed from January to July 2020 within the private natural reserves El Rey Zamuro (31 km2) and Las Unamas (40 km2), located in the Meta department in the Orinoquía region in central Colombia. We deployed cameras using a stratified random sampling design across forest core area strata. Cameras were spaced 1 km apart from one another, located facing wildlife trails, and deployed with no bait. Images were stored and reviewed by experts using the Wildlife Insights platform.

This data set contains 51 classes, predominantly mammals such as the collared peccary, black agouti, spotted paca, white-lipped peccary, lowland tapir, and giant anteater. Approximately 20% of images are empty.

The main purpose of the study is to understand how humans, wildlife, and domestic animals interact in multi-functional landscapes (e.g., agricultural livestock areas with native forest remnants). However, this data set was also used to review model performance of AI-powered platforms – Wildlife Insights (WI), MegaDetector (MD), and Machine Learning for Wildlife Image Classification (MLWIC2). We provide a demonstration of the use of WI, MD, and MLWIC2 and R code for evaluating model performance of these platforms in the accompanying GitHub repository:

https://github.com/julianavelez1/Processing-Camera-Trap-Data-Using-AI

The metadata contains references to images containing humans, but these have been removed from the dataset.

Citation, license, and contact information

If you use these data in a publication or report, please use the following citation:

Vélez J, McShea W, Shamon H, Castiblanco‐Camacho PJ, Tabak MA, Chalmers C, Fergus P, Fieberg J. An evaluation of platforms for processing camera‐trap data using artificial intelligence. Methods in Ecology and Evolution. 2023 Feb;14(2):459-77.

For questions about this data set, contact Juliana Velez Gomez.

This data set is released under the Community Data License Agreement (permissive variant).

Data format

Annotations are provided in COCO Camera Traps format.

For information about mapping this dataset's categories to a common taxonomy, see this page.

Downloading the data

Metadata is available here (2.4MB).

Images are available as a single zipfile:

Download from GCP (72GB)

Download from AWS (72GB)

Download from Azure (72GB)

Images are also available (unzipped) in the following cloud storage folders:

- gs://public-datasets-lila/orinoquia-camera-traps (GCP)

- s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/orinoquia-camera-traps (AWS)

- https://lilawildlife.blob.core.windows.net/lila-wildlife/orinoquia-camera-traps (Azure)

We recommend downloading images (the whole folder, or a subset of the folder) using gsutil (for GCP), aws s3 (for AWS), or AzCopy (for Azure). For more information about using gsutil, aws s3, or AzCopy, check out our guidelines for accessing images without using giant zipfiles.

Having trouble downloading? Check out our FAQ.

Other useful links

MegaDetector results for all camera trap datasets on LILA are available here.

Information about mapping camera trap datasets to a common taxonomy is available here.

Lindenthal Camera Traps

S3 base

s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/lindenthal-camera-traps

Overview

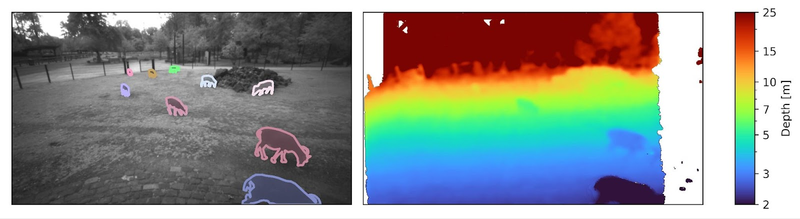

This data set contains 775 video sequences, captured in the wildlife park Lindenthal (Cologne, Germany) as part of the AMMOD project, using an Intel RealSense D435 stereo camera. In addition to color and infrared images, the D435 is able to infer the distance (or “depth”) to objects in the scene using stereo vision. Observed animals include various birds (at daytime) and mammals such as deer, goats, sheep, donkeys, and foxes (primarily at nighttime). A subset of 412 images is annotated with a total of 1038 individual animal annotations, including instance masks, bounding boxes, class labels, and corresponding track IDs to identify the same individual over the entire video.

Citation, license, and contact information

The capture process and dataset is described in more detail in the following preprint:

Haucke T, Steinhage V. Exploiting depth information for wildlife monitoring. arXiv preprint arXiv:2102.05607. 2021 Feb 10.

Please cite this manuscript if you use this data set. For questions about this data set, contact Timm Haucke at the University of Bonn.

This data set is released under the Community Data License Agreement (permissive variant).

Data format

The videos are captured using an Intel RealSense D435 stereo camera and stored as RealSense rosbag files. Each video contains intensity (RGB at daytime, IR at nighttime) and depth image streams with durations of 15 to 45 seconds at 15 frames per second. The depth images are computed on the RealSense D435 in real-time. Sequences recorded from December 17, 2020 additionally contain the raw left / right IR intensity images to facilitate offline stereo correspondence. The file names correspond to the local time, formatted as %Y%m%d%H%M%S.

A subset of videos is labeled with instance masks, bounding boxes, class labels, and track ids in the COCO JSON format. The first 20 frames of the video were skipped during annotation such that the annotated images are not affected by the automatic exposure process of the D435. After two seconds, every 10th frame was annotated with instance masks and track IDs. Together with the COCO JSON annotation files we provide the corresponding extracted still image files in JPEG (intensity) and OpenEXR (depth) format. The included Jupyter Notebook demonstrates how to load and visualize these images and the corresponding annotations.

Downloading the data

This dataset is provided as a single zipfile:

Download from GCP (213GB)

Download from AWS (213GB)

Download from Azure (213GB)

Having trouble downloading? Check out our FAQ.

New Zealand Wildlife Thermal Imaging

S3 base

s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/nz-thermal

Overview

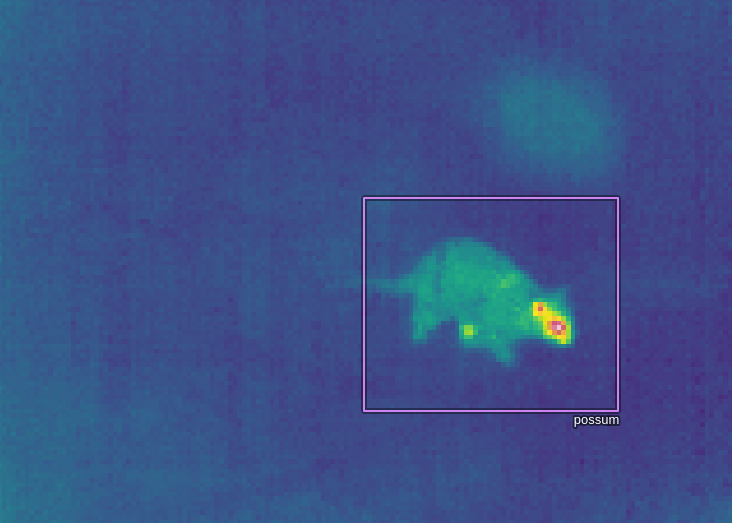

This dataset contains 121,190 thermal videos, from The Cacophony Project. The Cacophony Project focuses on monitoring native wildlife of New Zealand, with a particular emphasis on detecting and understanding the behaviour of invasive predators such as possums, rodents, cats, hedgehogs, and mustelids. The goal of this work is to conserve and restore native wildlife by eliminating these invasive species from the wild.

The videos were captured across various regions of New Zealand, mostly at night. Video capture was triggered by a change in incident heat; approximately 24,000 videos are labeled as false positives. Labels are provided for 45 categories; the most common (other than false positives) are "bird", "rodent", and "possum". A full list of labels and the number of videos associated with each label is available here (csv).

Benchmark results

Benchmark results on this dataset, with instructions for reproducing those results, are available here.

Citation, license, and contact information

For questions about this dataset, contact coredev@cacophony.org.nz at The Cacophony Project.

This data set is released under the Community Data License Agreement (permissive variant).

No citation is required, but if you find this dataset useful, or you just want to support technology that is contributing to the conservation of New Zealand's native wildlife, consider donating to The Cacophony Project.

Data format

If you prefer tinkering with a notebook to reading documentation, you may prefer to ignore this section, and go right to the sample notebook.

Three versions of each video are available:

- An mp4 video, in which the background has been estimated as the median of the clip, and each frame represents the deviation from that background. This video is referred to as the "filtered" video in the metadata described below. We think this is what most ML folks will want to use. This is not a perfectly faithful representation of the original data, since we've lost information about absolute temperature, and median-filtering isn't a perfect way to estimate background. Nonetheless, this is probably easiest to work with for 99% of ML applications. (example background-subtracted video)

- An mp4 video, in which each frame has been independently normalized. This captures the gestalt of the scene a little better, e.g. you can generally pick out trees. (example non-background-subtracted video)

- An HDF file representing the original thermal data, including - in some cases - metadata that allows the reconstruction of absolute temperature. More complicated to work with, but totally faithful to what came off of the sensor. These files are also more than ten times larger (totaling around 500GB, whereas the compressed mp4's add up to around 35GB), so they are not included in the big zipfile below. We don't expect most ML use cases to need these files.

The metadata file (.json) describes the annotations associated with each video. Annotators were not labeling whole videos with each tag, they were labeling "tracks", which are individual sequences of continuous movement within a video. So in some cases, for example, a single cat might move through the video, disappear behind a tree, and re-appear, in which case the metadata will contain two tracks with the "cat" label. The metadata contains the start/stop frames of each track and the trajectories of those tracks. Tracks are based on thresholding and clustering approaches, i.e., the tracks are themselves machine-generated, but they are generally quite reliable (other than some false positives), and the main focus of this dataset is the classification of tracks and pixels (both movement trajectory and thermal appearance can contribute to species identification), rather than improving tracking.

More specifically the main metadata file contains everything about each clip except the track coordinates, which would make the metadata file very large. Each clip also has its own .json metadata file, which has all the information available in the main metadata file about that clip, as well as the track coordinates.

Clip metadata is stored as a dictionary with an "info" field containing general information about the dataset, and a "clips" field with all the information about the videos. The "clips" field is a list of dicts, each element corresponding to a video, with fields:

- filtered_video_filename: the filename of the mp4 video that has been background-subtracted

- video_filename: the filename of the mp4 video in which each frame has been independently normalized

- hdf_filename: the filename of the HDF file with raw thermal data

- metadata_filename: the filename of the .json file with the same data included in this clip's dictionary in the main metadata file, plus the track coordinates

- width, height

- frame_rate: always 9

- error: usually None; a few HDF files were corrupted, but are kept in the metadata for book-keeping purposes, in which case *only* this field and the HDF filename are populated

- labels: a list of unique labels appearing in this video; technically redundant with information in the "tracks" field, but makes it easier to find videos of a particular category

- location: a unique identifier for the location (camera) from which this video was collected

- id: a unique identifier for this video

- calibration_frames: if non-empty, a list of frame indices in which the camera was self-calibrating; data may be less reliable during these intervals

- tracks: a list of annotated tracks, each of which is a dict with fields "start_frame", "end_frame", "points", and "tags". "tags" is a list of dicts with fields "label" and "confidence". All tags were reviewed by humans, so the "confidence" value is mostly a remnant of an AI model that was used as part of the labeling process, and these values may or may not carry meaningful information. "points" is a list of (x,y,frame) triplets. x and y are in pixels, with the origin at the upper-left of the video. As per above, the "points" array is only available in the individual-clip metadata files, not the main metadata file.

That was a lot. tl;dr: check out the sample notebook.

A recommended train/test split is available here. The train/test split is based on location IDs, but the splits are provided at the level of clip IDs. For a few categories where only a very small number of examples exist, those examples were divided across the splits (so a small number of locations appear in both train and test, but only for a couple of categories).

The format of the HDF files is described here. The script used to convert the HDF files to json/mp4 is available here.

Downloading the data

The main metadata, individual clip metadata, and mp4 videos are all available in a single zipfile:

Download metadata, mp4 videos from GCP (33GB)

Download metadata, mp4 videos from AWS (33GB)

Download metadata, mp4 videos from Azure (33GB)

If you just want to browse the main metadata file, it's available separately at:

metadata (5MB)

The HDF files are not available as a zipfile, but are available in the following cloud storage folders:

- gs://public-datasets-lila/nz-thermal/hdf (GCP)

- s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/nz-thermal/hdf (AWS)

- https://lilawildlife.blob.core.windows.net/lila-wildlife/nz-thermal/hdf (Azure)

The unzipped mp4 files are available in the following cloud storage folders:

- gs://public-datasets-lila/nz-thermal/videos (GCP)

- s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/nz-thermal/videos (AWS)

- https://lilawildlife.blob.core.windows.net/lila-wildlife/nz-thermal/videos (Azure)

For example, the clip with ID 1486055 is available at the following URLs (the non-background-subtracted mp4, background-subtracted mp4, and HDF file, respectively):

- https://storage.googleapis.com/public-datasets-lila/nz-thermal/videos/1486055.mp4

- https://storage.googleapis.com/public-datasets-lila/nz-thermal/videos/1486055_filtered.mp4

- https://storage.googleapis.com/public-datasets-lila/nz-thermal/hdf/1486055.hdf5

...or the following gs URLs (for use with, e.g., gsutil):

- gs://public-datasets-lila/nz-thermal/videos/1486055.mp4

- gs://public-datasets-lila/nz-thermal/videos/1486055_filtered.mp4

- gs://public-datasets-lila/nz-thermal/hdf/1486055.hdf5

Having trouble downloading? Check out our FAQ.

Neat thermal camera trap image

Trail Camera Images of New Zealand Animals

S3 base

s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/nz-trailcams

Overview

This data set contains approximately 2.5 million camera trap images from various projects across New Zealand. These projects were run by various organizations and took place in a diverse range of habitats using a variety of trail camera brands/models. Most images have been labeled by project staff and then verified by volunteers.

Labels are provided for 97 categories, primarily at the species level. For example, the most common labels are mouse (49% of images), possum (6.7%), and rat (5.5%). No empty images are provided, but some can be made available upon request. A full list of species and associated image counts is available here.

This dataset is a subset of a larger collection; an expanded version of this data can be downloaded directly from:

s3://doc-trail-camera-footage

We will periodically update the LILA dataset to keep up with the source bucket, but if you need the latest and largest, consider downloading directly from the source.

License and contact information

For questions about this data set, contact Joris Tinnemans.

This data set is released under the Community Data License Agreement (permissive variant).

Data format

Annotations (including species tags and unique location identifiers) are provided in COCO Camera Traps format.

Species information is also present in folder names (e.g. "AIV/yellow_eyed_penguin"), and location identifiers are also available in the EXIF "ImageDescription" tag for each image.

For information about mapping this dataset's categories to a common taxonomy, see this page.

Downloading the data

Metadata is available here.

Images are available in the following cloud storage folders:

- gs://public-datasets-lila/nz-trailcams (GCP)

- s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/nz-trailcams (AWS)

- https://lilawildlife.blob.core.windows.net/lila-wildlife/nz-trailcams (Azure)

We recommend downloading images (the whole folder, or a subset of the folder) using gsutil (for GCP), aws s3 (for AWS), or AzCopy (for Azure). For more information about using gsutil, aws s3, or AzCopy, check out our guidelines for accessing images without using giant zipfiles.

If you prefer to download images via http, you can. For example, the thumbnail below appears in the metadata as:

AIV/yellow_eyed_penguin/7E7FAB4D-C1DB-4445-8CB0-4412AFE2C71D_000005.jpg

This image can be downloaded directly from any of the following URLs (one for each cloud):

Having trouble downloading? Check out our FAQ.

Other useful links

MegaDetector results for all camera trap datasets on LILA are available here.

Information about mapping camera trap datasets to a common taxonomy is available here.

Desert Lion Conservation Camera Traps

S3 base

s3://us-west-2.opendata.source.coop/agentmorris/lila-wildlife/desert-lion-camera-traps

Overview